“Biases are commonly considered one of the most detrimental effects of artificial intelligence (AI) use. The EU is therefore committed to reducing their incidence as much as possible. However, the existence of biases pre-dates the creation of AI tools. All human societies are biased – AI only reproduces what we are. Therefore, opposing this technology for this reason would simply hide discrimination and not prevent it. It is up to human supervision to use all available means – which are many – to mitigate its biases.” – European Parliament (STOA).

Many of you were interested in our previous news item “JRC: New algorithm for risks & disasters management”. As a reminder, this new open-source algorithm, developed for the European Commission by the Joint Research Centre (JRC), can segment social media messages to identify, verify and help manage disaster events, such as floods, fires or earthquakes, in real time.

Below is the latest EU news on algorithms and, more generally, on AI and technology, along with an update from our sister project ENGAGE on its chatbot for first responders and, more broadly, for societal resilience.

On July 25, the Scientific Foresight Unit, part of the Directorate-General for Parliamentary Research Services (EPRS) of the European Parliament's Secretariat, published the study “Auditing the quality of datasets used in algorithmic decision-making systems” at the request of the Panel for the Future of Science and Technology (STOA): “This study begins by providing an overview of biases in the context of artificial intelligence, and more specifically to machine-learning applications. The second part is devoted to the analysis of biases from a legal point of view. The analysis shows that shortcomings in this area call for the implementation of additional regulatory tools to adequately address the issue of bias. Finally, this study puts forward several policy options in response to the challenges identified”.

From the study's introduction: “Biases are commonly considered one of the most detrimental effects of artificial intelligence (AI) use. The European Union (EU) is therefore generally committed to reducing their incidence as much as possible. However, mitigating biases is not easy, for several reasons. The types of biases in AI-based systems are many and different. Detecting them is a challenging task. Nevertheless, this is achievable if we manage to: i) increase awareness in the scientific community, technology industry, among policy-makers, and the general public; ii) implement AI with explainable components validated with appropriate benchmarks; and iii) incorporate key ethical considerations in AI implementation, ensuring that the systems maximise the wellbeing and health of the entire population […]”.

[FULL STUDY]

---

On the same day, the European Parliamentary Research Service (EPRS), through its Scientific Foresight Unit (STOA), published the briefing “Ethical and societal challenges of the approaching technological storm”: “Supported by the arrival of 5G and soon 6G, digital technologies are evolving towards an artificial intelligence-driven internet of robotic and bionano things. The merging of artificial intelligence (AI) with other technologies such as the internet of things (IoT) gives rise to acronyms such as 'AIoT', 'IoRT' (IoT and robotics) and 'IoBNT' (IoT and bionano technology). Blockchain, augmented reality and virtual reality add even more technological options to the mix. Smart bodies, smart homes, smart industries, smart cities and smart governments lie ahead, with the promise of many benefits and opportunities. However, unprecedented amounts of personal data will be collected, and digital technologies will affect the most intimate aspects of our life more than ever, including in the realms of love and friendship. This STOA study offers a bird's-eye perspective of the key societal and ethical challenges we can expect as a result of this convergence, and policy options that can be considered to address them effectively.”

[FULL BRIEFING]

---

ENGAGE sister project: Engage Society for Risk Awareness and Resilience

“One of the objectives of project ENGAGE is to identify how innovative social media technologies, with a significant focus on AI, can contribute to societal resilience. To promote this objective, we highlighted several vital issues that should be considered in adapting such technologies to the field of emergencies, disasters, and societal resilience and the delicate relationship of authorities and first responders with AI technologies […]

We highlighted several technologies that can be implemented in building societal resilience. One example is social media listening: using AI capabilities to monitor social media channels for tags, mentions, topics and specific keywords. Social media listening allows authorities and first responders to identify fast-rising and dominant themes on social media very quickly […]

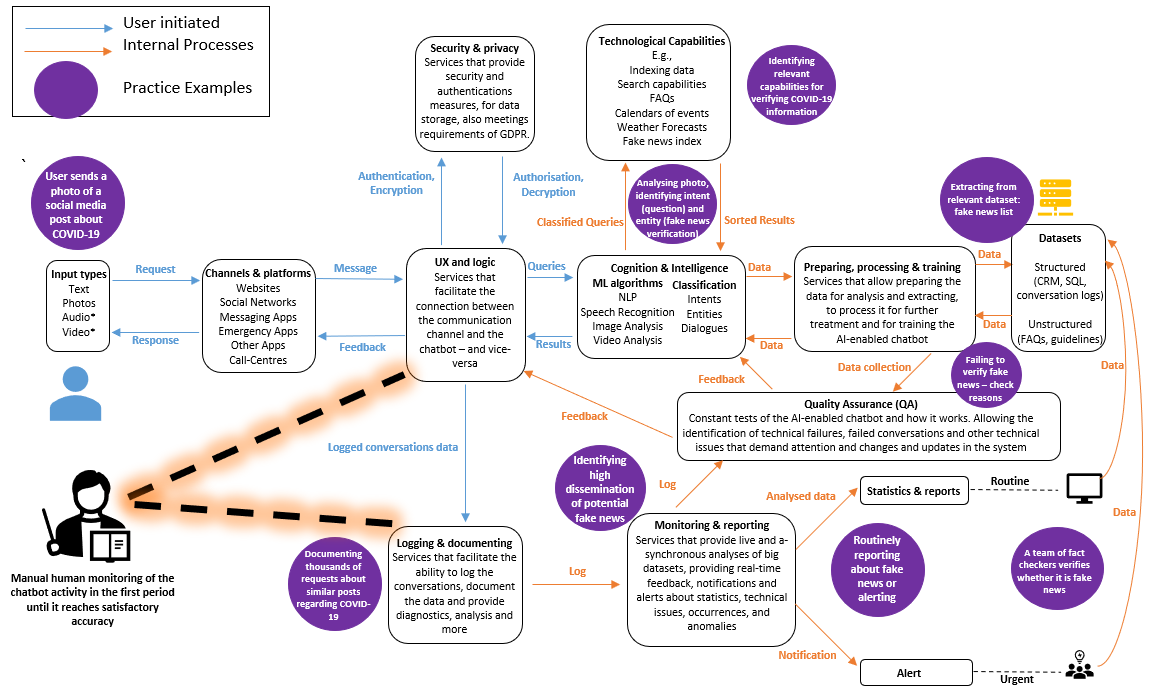

In the ENGAGE project, we developed a blueprint of an AI-enabled chatbot to converse with the public before, during, and after emergencies and disasters. In addition, we suggested how future chatbots can harness cutting-edge, state-of-the-art technologies for collecting and providing information to the public about disasters, augmenting some of the first responders’ roles in communicating with the public […]”
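To make the idea of social media listening more concrete, here is a minimal sketch of keyword-based trend spotting. It is not ENGAGE's method, which relies on AI capabilities rather than exact keyword matching. It assumes a hypothetical list of `(unix_timestamp, text)` posts and a hypothetical watch list of terms, counts mentions per time window, and flags terms whose count in the most recent active window jumps well above their average in the preceding windows. The names `WATCH_TERMS`, `count_by_window` and `rising_terms`, and the thresholds, are illustrative assumptions only.

```python
from collections import Counter, defaultdict
from typing import Iterable

# Hypothetical watch list for illustration only; real listening tools track many
# more keywords, hashtags and mentions, and use AI models rather than exact matching.
WATCH_TERMS = {"flood", "fire", "earthquake", "evacuation"}


def count_by_window(posts: Iterable[tuple[int, str]], window: int) -> dict[int, Counter]:
    """Count watch-term mentions in (unix_timestamp, text) posts, grouped into
    windows of `window` seconds. Each post counts at most once per term."""
    counts: dict[int, Counter] = defaultdict(Counter)
    for ts, text in posts:
        # Crude tokenisation: split on whitespace, strip '#', '@' and edge punctuation.
        tokens = {tok.strip("#@.,!?:;").lower() for tok in text.split()}
        for term in WATCH_TERMS & tokens:
            counts[ts // window][term] += 1
    return counts


def rising_terms(counts: dict[int, Counter], factor: float = 3.0, min_count: int = 3) -> list[str]:
    """Return terms whose count in the most recent window with any mentions is at
    least `min_count` and at least `factor` times their average over all earlier
    windows since the first active one (quiet windows count as zero)."""
    if not counts:
        return []
    windows = sorted(counts)
    latest = windows[-1]
    earlier = range(windows[0], latest)
    flagged = []
    for term, now in counts[latest].items():
        baseline = sum(counts.get(w, Counter())[term] for w in earlier) / max(len(earlier), 1)
        # Floor the baseline at 1 so a never-seen term still needs `factor` mentions.
        if now >= min_count and now >= factor * max(baseline, 1.0):
            flagged.append(term)
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical sample posts, one-hour windows.
    sample = [
        (0, "Nice weather today"),
        (3600, "Small fire reported downtown"),
        (7200, "#Flood warning on the river"),
        (7260, "Flood water rising fast"),
        (7300, "Road closed by flood"),
        (7320, "Evacuation ordered, flood!"),
    ]
    print(rising_terms(count_by_window(sample, 3600)))  # → ['flood']
```

Comparing each window against a term's own recent baseline, rather than using a fixed count, is one simple way to separate a sudden surge from a term that is always frequent; the `factor` and `min_count` thresholds would need tuning for any real channel.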

***

USEFUL LINKS

- STOA website

- Parliament Think Tank page

- Parliament Think Tank blog

- ENGAGE news on Chatbot as a first responder

- ENGAGE description of chatbot blueprint

- ENGAGE Deliverable 3.2 – Exploration of innovative use of communication and social media technologies

- CORE News on JRC: New algorithm for risks & disasters management

***

Featured image: Shutterstock.com.